Source: New Indian Express

In pursuit of the AI dream, the tech industry this year has plunked down about $400 billion on specialized chips and data centers, but questions are mounting about the wisdom of such unprecedented levels of investment.

At the heart of the doubts: overly optimistic estimates about how long these specialized chips will last before becoming obsolete.

With persistent worries of an AI bubble and so much of the US economy now riding on the boom in artificial intelligence, analysts warn that the wake-up call could be brutal and costly.

“Fraud” is how renowned investor Michael Burry, made famous by the movie The Big Short, described the situation on X in early November.

Before the AI wave unleashed by ChatGPT, cloud computing giants typically assumed that their chips and servers would last about six years.

But Mihir Kshirsagar of Princeton University’s Center for Information Technology Policy says the “combination of wear and tear along with technological obsolescence makes the six-year assumption hard to sustain.”

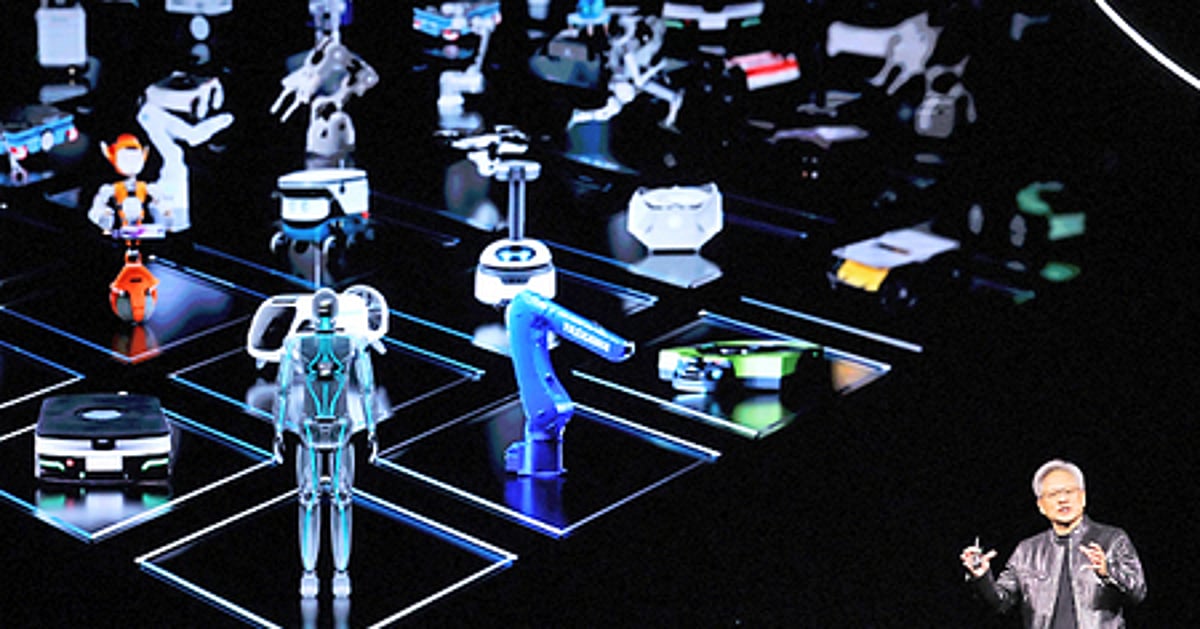

One problem: chip makers — with Nvidia the unquestioned leader — are releasing new, more powerful processors much faster than before.

Less than a year after launching its flagship Blackwell chip, Nvidia announced that Rubin would arrive in 2026 with performance 7.5 times greater.

At this pace, chips lose 85 to 90 percent of their market value within three to four years, warned Gil Luria of financial advisory firm D.A. Davidson.
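To see how far apart those two views sit, the arithmetic can be sketched in a few lines of Python. This is an illustration only: it assumes straight-line depreciation over the six-year life cited above (the article does not say which accounting method the companies use), and the function names are made up for the example. It compares the share of cost still carried on the books with the 10 to 15 percent of market value that Luria's estimate implies remains after three to four years.

```python
def straight_line_book_fraction(age_years: float, useful_life_years: float) -> float:
    """Share of purchase cost still on the books under straight-line depreciation.

    Assumption for illustration: equal write-downs each year, no salvage value.
    """
    return max(0.0, 1.0 - age_years / useful_life_years)


def implied_annual_retention(fraction_remaining: float, years: float) -> float:
    """Constant yearly retention rate that leaves `fraction_remaining` after `years`."""
    return fraction_remaining ** (1.0 / years)


USEFUL_LIFE_YEARS = 6          # pre-AI assumption cited in the article
AGES_YEARS = (3, 4)            # "within three to four years"
VALUE_LOST = (0.85, 0.90)      # "85 to 90 percent of their market value"

for age in AGES_YEARS:
    book = straight_line_book_fraction(age, USEFUL_LIFE_YEARS)
    for lost in VALUE_LOST:
        market = 1.0 - lost
        yearly = implied_annual_retention(market, age)
        print(
            f"age {age}y, {lost:.0%} lost: "
            f"book value {book:.0%} of cost, market value {market:.0%}, "
            f"gap {book - market:.0%}, "
            f"implied value kept per year {yearly:.0%}"
        )
```

Under these assumptions, a chip that the books still value at a third to a half of its purchase price would fetch only a small fraction of that on the market, which is the gap the analysts are pointing to.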

Nvidia CEO Jensen Huang made the point himself in March, explaining that when Blackwell was released, nobody wanted the previous generation of chip anymore.

“There are circumstances where Hopper is fine,” he added, referring to the older chip. “Not many.”
